
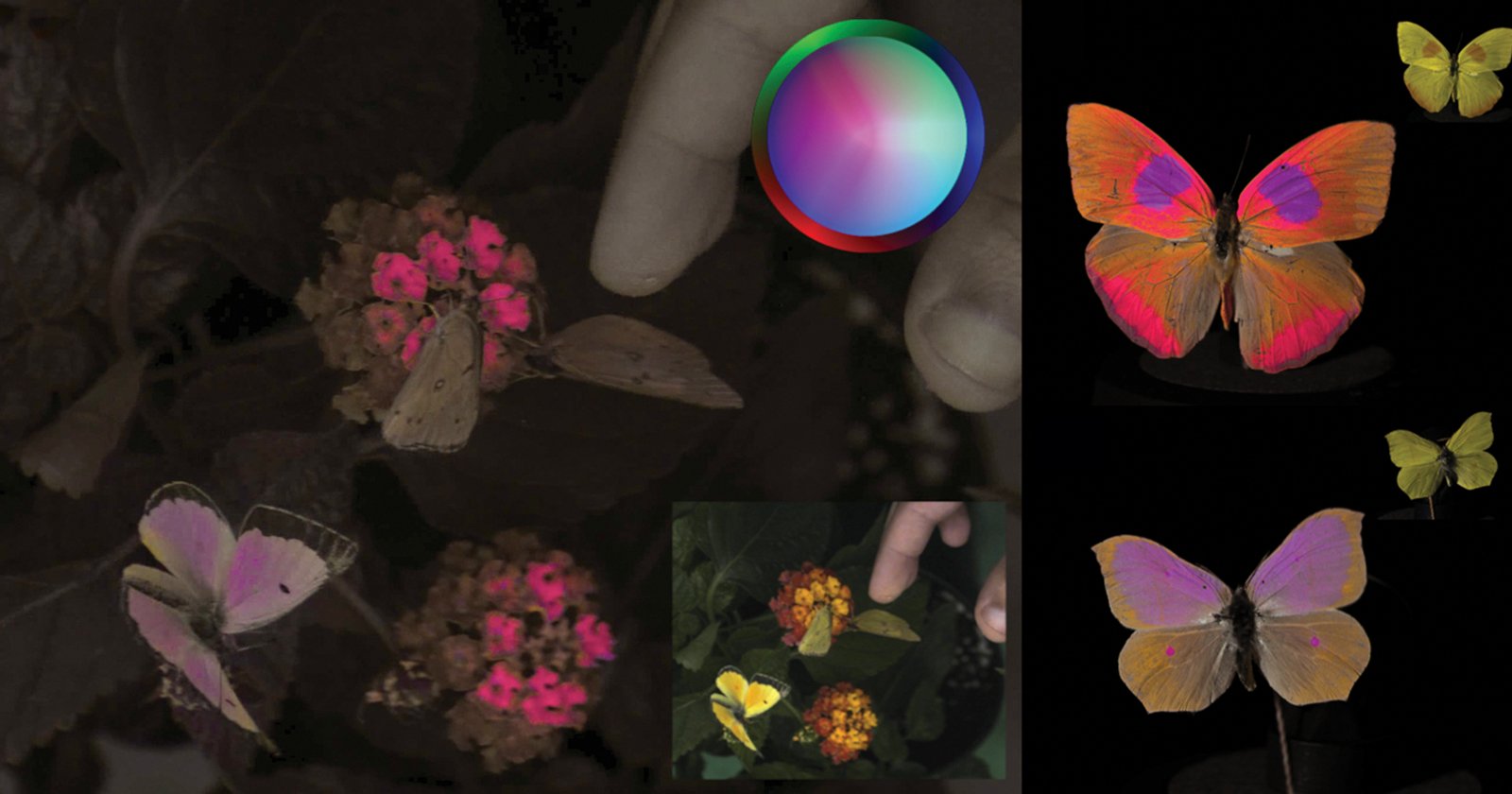

Credit: Daniel Hanley

It’s well understood that animals see differently from us humans, but visualizing those differences has proved challenging. However, a group of scientists from the UK and the US has developed a camera system that can accurately record photos and videos as animals perceive them.

“Each animal possesses a unique set of photoreceptors, with sensitivities ranging from ultraviolet through infrared, adapted to their ecological needs,” explain the scientists behind the recently published study. Some animals are even able to detect polarized light. The result is that every animal perceives color in its own way. Our eyes and commercial cameras can’t pick up those variations in light, however.

As Gizmodo reports, until now, scientists have relied on multispectral photography to visualize the light spectrum. This process involves taking a series of photographs in wavelength ranges beyond the human-visible spectrum, typically using a camera sensitive to broadband light and shooting through a succession of narrow-bandpass filters. While this provides fairly accurate color results, multispectral imaging only works on still objects and doesn’t allow viewing of temporal signals from animals.

“The idea of recording in UV has been around for a long time now, but there have been relatively few attempts due to the technical difficulties involved in it. Interestingly, the first published UV video is from 1969!” study authors Daniel Hanley and Vera Vasas tell Gizmodo. “Our new approach provides a valuable degree of scientific accuracy enabling our videos to be used for scientific purposes.”

The group’s findings were published in PLOS Biology. Their work introduces “hardware and software that provide ecologists and filmmakers the ability to accurately record animal-perceived colors in motion. Specifically, our Python codes transform photos or videos into perceivable units (quantum catches) for animals of known photoreceptor sensitivity,” the study explains.
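To make the term “quantum catch” concrete, here is a minimal sketch of the textbook definition: the light reaching a photoreceptor (surface reflectance times illuminant) is weighted by that receptor’s sensitivity and summed across wavelengths. This is not the authors’ code. The Gaussian sensitivity curves, the 40 nm width, the flat illuminant, the toy UV-reflecting surface, and all function names are assumptions made for illustration; the peak wavelengths are only rough values for a honeybee-like eye.

```python
import numpy as np


def gaussian_sensitivity(wavelengths_nm, peak_nm, width_nm=40.0):
    """Hypothetical bell-shaped sensitivity curve with a maximum of 1 at peak_nm."""
    return np.exp(-0.5 * ((wavelengths_nm - peak_nm) / width_nm) ** 2)


def quantum_catch(wavelengths_nm, reflectance, illuminant, sensitivity):
    """Trapezoid-rule integral of reflectance * illuminant * sensitivity."""
    product = reflectance * illuminant * sensitivity
    steps = np.diff(wavelengths_nm)
    return float(np.sum(0.5 * (product[1:] + product[:-1]) * steps))


# Wavelength grid from 300 nm (ultraviolet) to 700 nm (red), in 1 nm steps.
wavelengths_nm = np.arange(300.0, 701.0, 1.0)

# Assumption: a perfectly flat illuminant and a toy surface that reflects only UV.
illuminant = np.ones_like(wavelengths_nm)
uv_patch = np.where(wavelengths_nm < 400.0, 1.0, 0.0)
white_standard = np.ones_like(wavelengths_nm)

# Assumption: rough, illustrative receptor peaks for a honeybee-like eye.
receptor_peaks_nm = {"uv": 344.0, "blue": 436.0, "green": 544.0}

# Express each catch relative to what a perfect white surface would produce.
relative_catches = {}
for name, peak_nm in receptor_peaks_nm.items():
    sensitivity = gaussian_sensitivity(wavelengths_nm, peak_nm)
    patch = quantum_catch(wavelengths_nm, uv_patch, illuminant, sensitivity)
    white = quantum_catch(wavelengths_nm, white_standard, illuminant, sensitivity)
    relative_catches[name] = patch / white

print(relative_catches)
```

In this toy setup the UV receptor responds strongly to the patch while the green receptor barely responds at all, which is the kind of difference a human viewer or a standard camera would miss.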

This figure demonstrates how different a black-eyed Susan flower can look at different light wavelengths. Image credit: Daniel Hanley

The technology combines existing photography methods with novel hardware and software. “The system works by splitting light between two cameras, where one camera is sensitive to ultraviolet light while the other is sensitive to visible light. This separation of ultraviolet from visible light is achieved with a piece of optical glass, called a beam splitter. This optical component reflects UV light in a mirror-like fashion, but allows visible light to pass through just the same way as clear glass does,” study authors Daniel Hanley, an associate professor of biology at George Mason University, and Vera Vasas, a biologist at the Queen Mary University of London, told Gizmodo. “In this way the system can capture light simultaneously from four distinct wavelength regions: ultraviolet, blue, green, and red.”

After the camera captures the images, the software transforms the data into “perceptual units” corresponding to an animal’s photoreceptor sensitivity. The researchers compared their results to spectrophotometry, the gold standard method for false color images, and found their system was between 92 and 99 percent accurate.

This new tool is groundbreaking for research scientists, and it should also help filmmakers create better nature documentaries. The National Geographic Society provided some of the funding for this research, so it stands to reason that future Nat Geo films may use this tech. The researchers explained that they designed the software with end users in mind, building in automation and interactive platforms where possible.

This screenshot from a video shows an iridescent peacock feather through the eyes of four different animals: (A) peafowl (Pavo cristatus) false color, (B) standard human colors, (C) honeybee color, and (D) dog vision. Image credit: Daniel Hanley

“The camera system and the associated software package will allow ecologists to investigate how animals use colors in dynamic behavioral displays, the ways natural illumination alters perceived colors, and other questions that remained unaddressed until now due to a lack of suitable tools,” the abstract of the paper explains. “Finally, it provides scientists and filmmakers with a new, empirically grounded approach for depicting the perceptual worlds of nonhuman animals.”
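The conversion from four camera channels (ultraviolet, blue, green, red) to an animal’s “perceptual units” can be pictured as a per-pixel mapping. One simple way to sketch such a mapping, and an assumption here rather than a description of the authors’ software, is to fit a linear transform by least squares from paired examples and then apply it to every pixel of a frame. All names and data below are hypothetical and synthetic; nothing here uses the video2vision API.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Synthetic stand-in for calibration data (assumption): 50 color samples,
# each with 4 camera channel values (UV, blue, green, red) and the matching
# quantum catches for a hypothetical animal with 3 photoreceptor types.
true_matrix = rng.uniform(0.0, 1.0, size=(4, 3))
camera_samples = rng.uniform(0.0, 1.0, size=(50, 4))
animal_catches = camera_samples @ true_matrix

# Least-squares fit of the 4x3 matrix that maps camera channels to catches.
fitted_matrix, _, _, _ = np.linalg.lstsq(camera_samples, animal_catches, rcond=None)


def camera_to_animal(frame, matrix):
    """Apply the fitted transform to a frame of shape (height, width, 4)."""
    return frame @ matrix


# A tiny synthetic 2x3 pixel frame with four channels per pixel.
frame = rng.uniform(0.0, 1.0, size=(2, 3, 4))
animal_frame = camera_to_animal(frame, fitted_matrix)

print(animal_frame.shape)
```

Because the synthetic data are noise-free and exactly linear, the fit recovers the original matrix. Real camera data would not be, which is why a comparison against an independent measurement such as spectrophotometry matters.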

The researchers were clear that they hope others will replicate their technology. The group used cameras and hardware that are readily available commercially, and they have made the code open source and publicly available to encourage the community to continue improving their work. Those interested can find the software packaged as a Python library called video2vision. It can be downloaded and installed from the PyPI repository or from GitHub.

Image credits: Photographs by Daniel Hanley. The research paper, “Recording animal-view videos of the natural world using a novel camera system and software package,” is available on PLOS Biology.
