
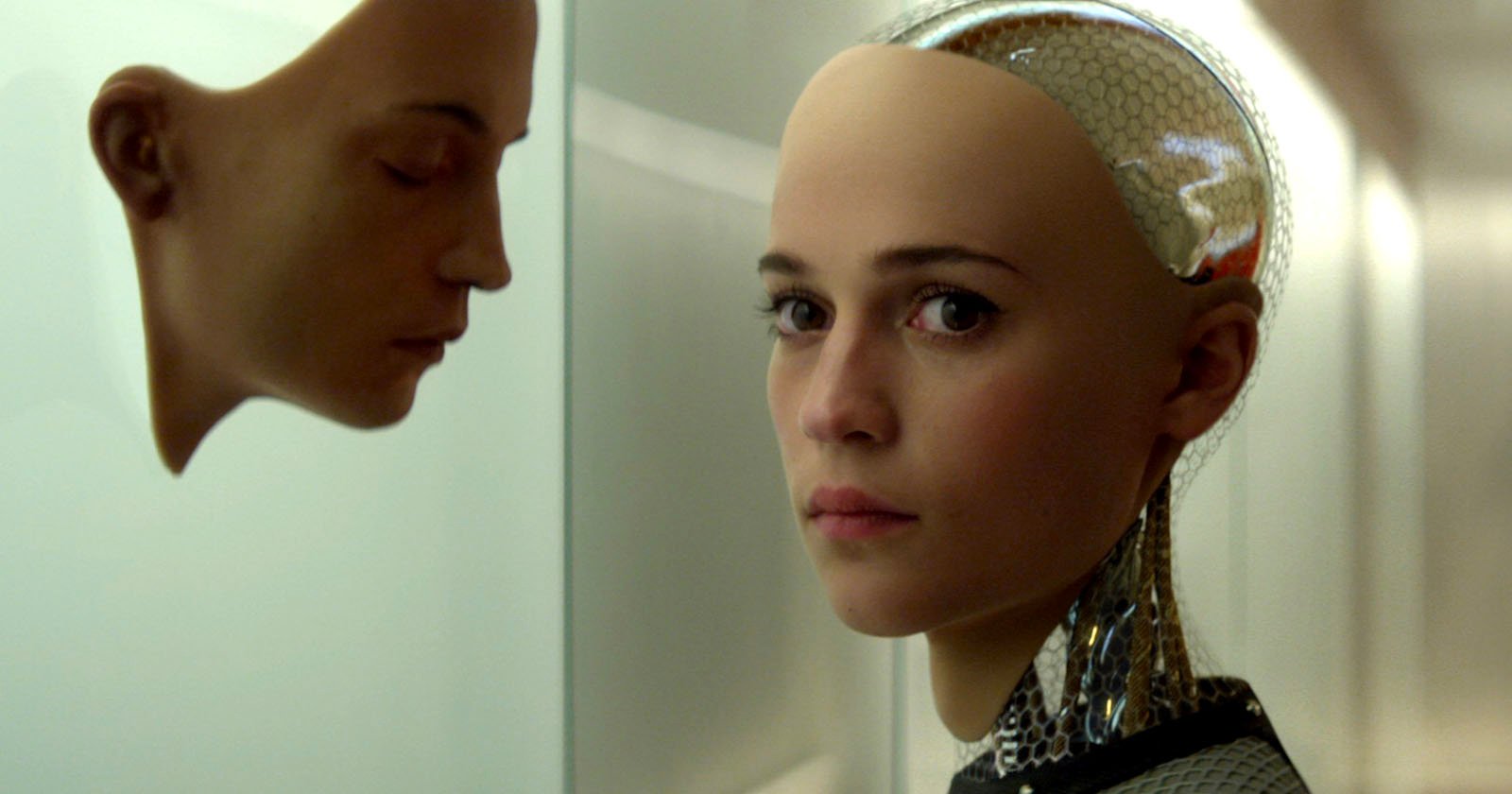

In the film Ex Machina, the protagonist develops feelings for the humanoid robot Ava. | Universal Pictures

A research paper from Google’s DeepMind, the company’s AI research laboratory, has warned that humans could become too enamored with AI-powered avatars.

Advanced, personalized assistants could be the next frontier of AI technology. They may appear in the form of anthropomorphic avatars and “radically alter the nature of work, education, and creative pursuits as well as how we communicate, coordinate, and negotiate with one another, ultimately influencing who we want to be and to become,” the DeepMind researchers say.

But there are numerous potential pitfalls. One is that some people could form inappropriately close bonds with AI assistants, resulting in a loss of autonomy for the human. The problem could be made worse if the assistant has a human-like virtual representation or a human-like face, as the robots do in the movies Ex Machina and M3gan. The Decoder notes that the risks include negative social consequences for the user and the loss of all social ties as the AI replaces all human interaction.

A Google engineer named Blake Lemoine was fired after claiming that an AI chatbot program he was working on was sentient.

“I know a person when I talk to it,” Lemoine said in an interview with the Washington Post. “It doesn’t matter whether they have a brain made of meat in their head. Or if they have a billion lines of code. I talk to them. And I hear what they have to say, and that is how I decide what is and isn’t a person.”

Dangers and Safety Measures

If AI assistants do proliferate, it is inevitable that they will begin interacting with one another. This raises questions about whether they will cooperate and coordinate with one another or start competing against each other.

“If they just pursue their users’ interests in a competitive or chaotic manner, clearly that could lead to coordination failures,” Iason Gabriel, a research scientist in the ethics research team at DeepMind and co-author of the paper, tells Axios.

The DeepMind team recommends developing comprehensive assessments for AI assistants and quickly advancing the development of socially beneficial ones.

“We currently stand at the beginning of this era of technological and societal change. We therefore have a window of opportunity to act now – as developers, researchers, policymakers, and public stakeholders – to shape the kind of AI assistants that we want to see in the world,” the research team writes.

The full paper can be read here.
